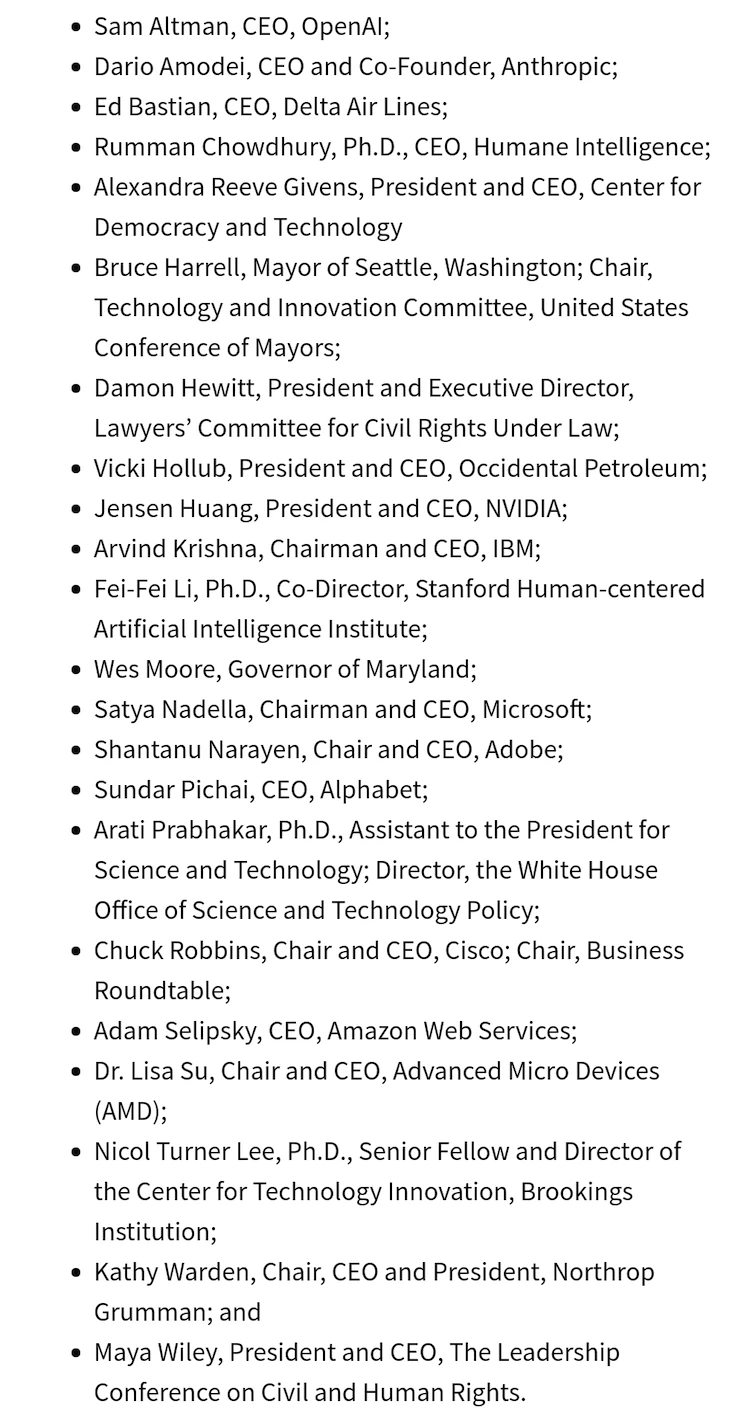

@bindureddy “The current administration brought all these Closed AI folks together to create an “AI Safety Board.” Noticeably absent from this list are two of the most prominent leaders in the space – Zuck and Elon. This is absolutely terrifying, to say the least!!”

@skillsgaptrainer “Dear Bindu, Your concerns regarding the composition of the newly established AI Safety Board are entirely valid. It’s crucial to highlight several points for you and your audience’s consideration.

The exclusion of key technological leaders such as Elon Musk, Mark Zuckerberg, and Tim Cook from this initiative is not merely an oversight; it represents a significant deficiency in the board’s technological foresight and strategic capacity. This absence is particularly troubling as it diverges from the envisioned 2000-year trajectory aimed at achieving the “Trek” vision — a long-term roadmap of human civilization’s adoption of advanced technologies for global sustainability, space exploration and AI-driven mega-projects.

Currently, the board demonstrates a strong preference for “conventional AI safety” and security measures, focusing narrowly on “maintaining existing infrastructures” rather than “incorporating advanced technologies” that could “unify AI with space systems”. This conservative focus shifts the board’s path from a “broad technological advancement strategy” to a “limited scope centered on immediate safety and public concerns” — a shift that risks inducing a “lockdown mentality” prioritizing “control” over “expansive exploration and innovation”.

The inclusion of Delta Air Lines, in lieu of aerospace giants like Lockheed Martin or Boeing, further compromises the board’s potential to merge cutting-edge space technology with AI advancements. This decision restricts the scope of AI’s applications and limits the strategic vision necessary to propel humanity towards a balanced future in which AI co-exists in harmony with biological existence and space exploration.

The implications of this board’s configuration are profound. By not aligning with the most expansive and best-developed technological capabilities, technical talent, and vision available to humanity, a vision that includes humanity’s expansion on Earth and beyond, we risk stifling innovation and curtailing the growth of AI in pivotal, future-defining projects. This conservative approach may protect against immediate threats, and may be palatable to the public, but it does little to foster or secure our technological leadership globally and in outer space.

Additionally, the lack of a proactive strategy that integrates AI with visionary defence and space projects hints at a potential stagnation in North American leadership in these critical fields, and at a lack of leadership courage to sell a controversial idea. This oversight is not just a missed opportunity but a strategic error in a global technological race where AI, defence, and space technologies are key, especially given the multiplicative benefit that their synergy would provide.

It is imperative for the designers of the AI Safety Board’s mandate to courageously broaden its scope, balancing AI safety with the use of AI in large-scale engineering projects that span the entire space humans will occupy in the 21st century, including leadership in defence and space exploration. All of these are, in reality, part of the AI safety conversation and of systems development, if one considers that we are heading into a growth-based and progress-based civilization.

This board’s composition is already far superior to any other political or corporate alliance currently existing globally. It involves leaders from organizations that are highly complex, mature, and of pure STEM integrity, unlike any other alliance on Earth. Most other alliances feature very low maturity and technology readiness levels, and are also compromised from a strategic perspective by alliance processes that were neither designed nor chosen by engineers. To preserve the integrity of STEM disciplines in practice, to preserve the enlightenment and humanity’s ability to develop reasoning in lockstep with the advancement of AI, and to ensure our strategic resilience, the board must consider including leaders from pioneering companies like SpaceX and Tesla (the foundational members) and other innovators who can propel this agenda forward, and eventually some of the top defence engineering companies, which is what they are. Let’s call a spade a spade. Admit you have lacked a cyber-secure internet for 30+ years, so don’t complain about this one.”

The original list:

Project Page: “Echoes of the Past: The Best of Two Worlds” skillsgaptrainer.com/echoes-of-the-

“Furthermore, the current composition reflects a few potential alliance biases, possibly undermining its STEM effectiveness due to a lack of diverse technological insights. By not including a broader array of tech leaders known for their pioneering work in AI and space, such as those from xAI, Tesla, and SpaceX, we risk a strategic misalignment with the rapid evolution of global technological capacities and with projects that are not yet in the minds of the CEOs on the second revision list.

In conclusion, the establishment of the AI Safety Board represents a significant step forward in AI governance, and it is a crucial opportunity that America is fighting hard to demonstrate and accomplish. However, it requires a broader vision that aligns with the expansive potential of AI and space technologies. This vision should be adaptable, allowing for iterative updates and refinements to this list without lengthening it in a way that reduces the average quality of alliance member effectiveness, while pursuing detailed design and engineering analyses of the digital and technological capabilities of these organizations and their potential synergy within a cohesive and effective tech ecosystem. Only by adopting such a comprehensive approach can we ensure that AI development is not only safe but also transformative, driving substantial benefits for our society and shaping our future.

Good luck to everyone else developing AI too! We’re all friends.

Sincerely, SGT”

PS Elon: The AI safety board was meant to be a platform for visionaries like you, Elon, and for trailblazing companies like SpaceX, to steer America and humanity into a future defined by innovation and exploration. It’s disappointing to see a retreat to cautious politics potentially diluting this vision. We know the original intent had SpaceX and Tesla at its core as true pioneers and the first foundational members. It’s crucial that this initial oversight be corrected to realign the board’s composition with its foundational goals. Members of the public should try to figure out where the political firewall exists that blocks the foundational members from appearing on the second revision, in this evaluation round #1. They are probably just afraid you will lecture them when they feel they wanna break a few rules here and there.

Let’s stay vigilant and push for the inclusion of those who can truly lead the charge in marrying AI with space technology, embodying the audacity of our shared aspirations.

Additionally, the rationale for potentially including aerospace giants such as Lockheed Martin and Boeing in the future is compelling. These organizations are at the forefront of aerospace innovation and possess the sophisticated technological infrastructure necessary to enhance our AI and space exploration capabilities. Furthermore, such defence engineering companies’ expertise extends to critical systems that ensure security and safety on Earth, ranging from engineering life-sustaining, resilience, and emergency response systems to developing small modular nuclear reactors. Defence engineering company involvement is also crucial in advancing the technology behind the nuclear cores of Nimitz-class aircraft carriers and submarines, which is essential for bolstering self-powered city defences that can run without an electrical grid, from 2025 to 2050, for global cities.

Their involvement would not only enhance the board’s technical depth, technical legitimacy, and organizational security, but also ensure that our defence and aerospace strategies are robust and forward-thinking. Acknowledging their absence in the initial round as a careful, strategic consideration allows us the flexibility to bring them on board as we progress and as their inclusion becomes politically and publicly palatable.

By keeping the door open for such key additions, we can adapt our strategy to include the best of aerospace technology, thereby reinforcing our commitment to leading at the frontier of space and AI integration. This approach will help ensure that the board remains dynamic and capable of evolving with the technological and geopolitical landscapes.

Don’t get mad, Elon. Don’t get sad. Humanity, the average people, will fight for you, Zuck and Tim, to work with the best of the best companies: not the best financially, but truly the best in real terms, the companies with the highest complexity and highest engineering level, not the average or worst companies, as in every other partnership on Earth formed for greed. All you have to do is protect citizens with engineering that protects them.

PS: Bindu

“Good observation on who is missing from the list. Great minds think alike!”

“To the stars we go.

With Elon’s TeslaX vTOL.

To see the ocean and the sky.

With our own xAI”

Related Content:

Post on X:

@bindureddy “Why is Llama 3 Doing So Well In The Human Eval?

Llama-3 is Number One for English Prompts on the Human Eval Dash.

– Training cut-off has a pretty significant impact on the Human Eval, and Llama-3 has a recent training cut-off of 12/23

– Llama-3 is a lot more uncensored! People like uncensored LLMs, and LLMs get dinged for not responding

You can look at the ranking where you exclude refusals, and Llama-3 falls below Claude.

– Here is the best part, Claude would be #1 on the leaderboard if it wasn’t so nerfed and refused to answer questions other LLMs answer

Humanity has spoken, and we prefer that LLMs answer questions rather than censor information on the world wide web!!”

@skillsgaptrainer @bindureddy “It’s gotten a lot easier to get responses from AI language models without them changing what you asked for, compared to a few months or a year ago, when it might have been a struggle to discuss issues pertaining to various sectors, such as politics.

The AI language models can also help smooth out the words without messing with what you mean, which is great if you accidentally say something off because you’re having a bad day or not being careful. This is really helpful when you just need a bit of tweaking, editing, improving, or polishing on the final piece you are working on for something you wrote.

In addition, the AI language models often try to make everything sound very formal by default, but people usually want it to sound more like the original creator of the information or the original data — more natural, more human, more direct, and usually simpler so that it can achieve a wide understanding, rather than like a textbook, too academic and too formal as is the default on AI language models.

When requesting output from an AI language model, it’s beneficial to specify the desired writing style, such as ‘natural sounding,’ ‘direct,’ ‘stick closer to my narrative,’ or ‘make it more like my argument.’ This ensures that the final output doesn’t lose its unique style and become a dry, overly formal piece that fails to engage readers. It also ensures that the AI language model doesn’t overlook, or intentionally or accidentally obfuscate the original intention of the data and communication.”

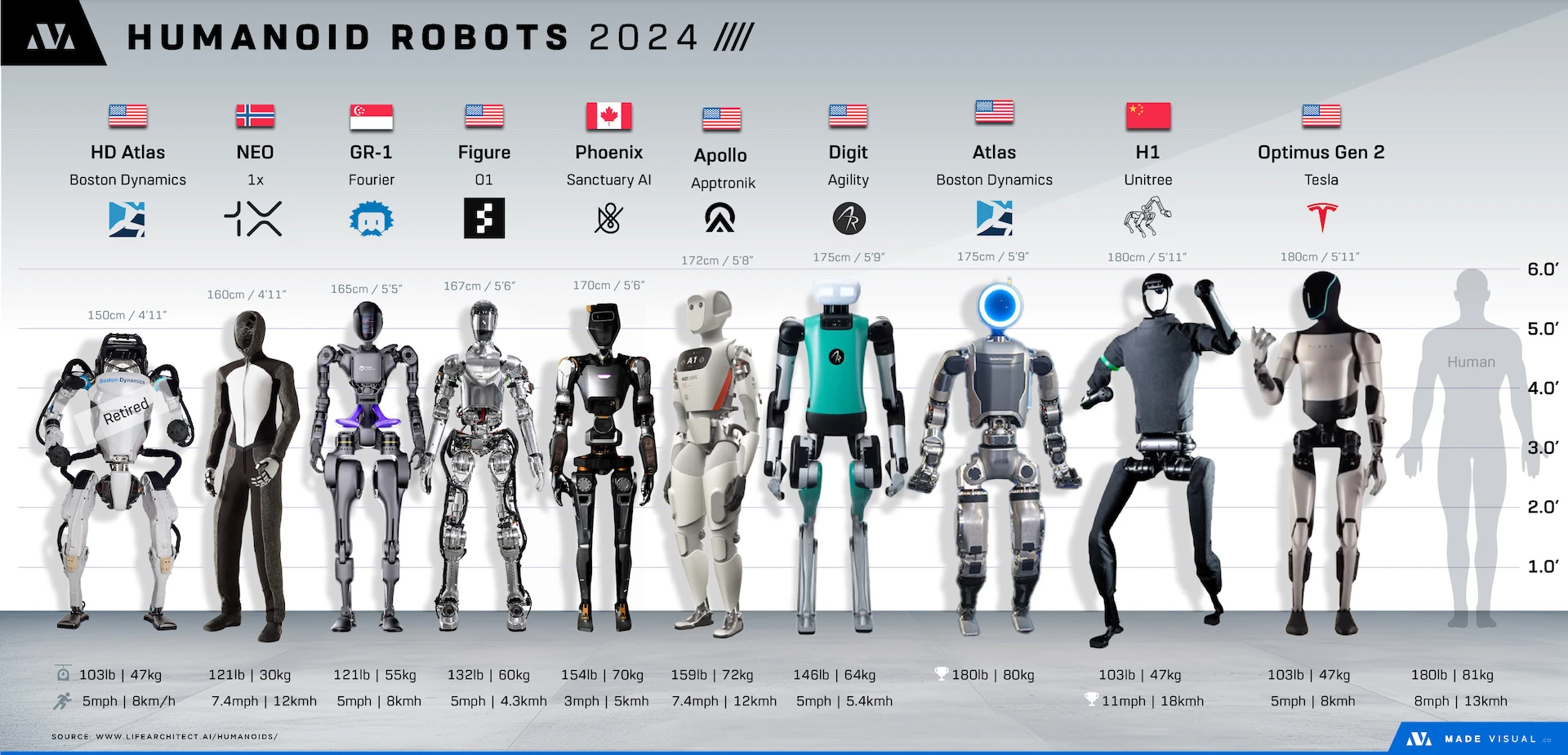

@bindureddy “The Age of Robotics

The age of robotics with multimodal LLM brains is finally here. The robot will understand instructions and perform tasks. It can use a laptop, wash dishes, and make coffee!

These robots will be more robust and way smarter than humans. Most of us will call these robots “AGI.”

About 5-10x more robotics companies will be started in the next 12 months. The first robots will be in the market in 2-3 years and will be expensive.

Gathering training data and simulating synthetic data for these robot LLMs will be the biggest constraint.

Early movers such as Figure/OpenAI will have a significant advantage in robot AI, and Tesla will have an obvious hardware advantage.

Over time, we may have a very performant OSS robot LLM. Nvidia will likely be the leader in the open-source robot movement.

In 5-6 years, we will have several players in the market with multiple commercial robots, some of which will be affordable.

These robots will forever change our world and our species dramatically! More than any other technology in the history of mankind.”

@skillsgaptrainer @bindureddy “Wow, what an observation! This image powerfully illustrates the epic story unfolding.

It’s clear this is more than an AI revolution — it’s a technological transformation poised to safeguard US company revenues from upcoming recessions and fuel sustained growth pressure, despite current negative financial metrics in the debt system and terrible geopolitical climates and the associated market risks.

This image makes you realize the profound impact AI & robotics will have from the mid-2020s onward. Excited to have this post on our timeline “Reply” feed!”

@skillsgaptrainer @bindureddy “Really like the HUMANOID ROBOTS 2024 picture. It’s possibly the only technology that can be as exciting as AI itself or the Enterprise.

It’s appealing when humanoid robots are dressed in soft, casual sports attire, like a plush grey tracksuit. This aesthetic not only enhances approachability and familiarity but also contrasts sharply with the visually dissonant styles of older cinematic robots, be it the shiny metallic structures akin to a Terminator, the intricate wirings reminiscent of a Matrix robot, or the simplistic plastic, bubble-shaped components that resemble children’s Tonka toys or sterile hospital robots from Japan. A modern, relatable appearance aligns better with contemporary design sensibilities.

Consider this picture of the NEO robot as a prime example. Its attire, material selection, clothing design, material texture, and colour scheme look meticulously curated, contributing to a design that is lightweight, soft, visually comforting, agile, and performant, with the feel of a high-level athlete or a friendly boxing, martial arts, or track coach who is trying to build up your skill. The elegant, deeply futuristic, visually non-distracting use of greys, whites, and blacks, together with the minimalist, subtle design of its face and the technological design of its hands, exudes an aura of intrigue, mystery, thought-provoking curiosity, and sophistication.

Notably, NEO seems to stand out as one of the two fastest robots in the entire set, while also being the lightest, thus minimizing its ecological footprint. Coincidentally, the “My Computer” identifier on all of Skills Gap Trainer’s computers has been NEO since 1999. Furthermore, its compact stature, shorter than a typical human and definitely shorter than human or robot soldiers, would serve as a strategic safety measure, limiting its military equipment handling capabilities and logistical efficiency in potential conflicts. This compactness would also ensure that NEO remains portable enough for human interaction and assistance, so that humans could move the robot if it ever needed assistance, say at a remote location. If one were to install a top-level AI system inside this NEO concept, as the design is shown in the image, it could be a practical and decent choice that people might want as a colleague to help out in life, especially when it comes to enhancing business productivity.

We haven’t looked into this robot yet, but it might be worth doing so, to check out its technical parameters and team.

So the big question is: when can we get the open source files so we can 3D print it with our Bambu Lab X1-Carbon Combo 3D Printers, with the new advanced carbon filaments they have developed, thermoplastics, nylons, and polycarbonate? It would be exciting to try out different material combinations, based on their various X, Y, Z axis impact metrics, strength metrics, etc. And obviously we need a website where we can buy the associated electronics parts kit to integrate into our 3D prints. This would allow us to learn a great 21st century skill, ‘how to manufacture and repair robots for humanity’s next great occupation’.”

@elonmusk “Going for a walk with Optimus”

@skillsgaptrainer @elonmusk “The main issue with the robots we’ll encounter in the near future isn’t their strength or menacing appearance, as depicted in the Terminator film series, but rather their intelligence. It looks like they will be extraordinarily more intelligent than the independent Terminator robots. This is the primary aspect where the film series might have missed the mark, underestimating the real knowledge, capabilities, and potential of future humanoid robots.

This design choice is top-notch and the smartest option for envisioning the next generation of AI. It’s the #1 choice, with NEO as #2. It is nice that NEO has a grey/black tracksuit, as the ability to change the clothing could be very utilitarian in case of wear, damage, or stains on the robot. Clothing provides a protective buffer against damage. Adding a little grey and long, shimmering metallic silver streaks to the grey palette might make the robot look even more sophisticated, classy, and timeless.”

@WallStreetSilv “I’m sure these will never, ever be used against US citizens. I feel safe because the government loves me” – Leftist

@SkillsGapTrainer “Don’t worry, it’s just a coincidence that the robot can walk on rural property, carry a rifle, and is designed after man’s most dangerous natural adversaries… cats, tigers, wolves and dogs.”

@LucasBotkin “Citizens should own sniper rifles. YouTube video coming soon.”

@skillsgaptrainer “Agreed! In the context of AI advancements, incoming humanoid robots, animal robots, drone robotics, and escalating global conflicts, citizens possessing sniper rifles sounds like an excellent idea! Glad that you are doing videos on long-range firearms for the citizen defence archive. This will be of great value to humanity, and everyone should download and save it.

We’re just a small group of newcomers to shooting sports from Canada, and it’s really exciting. Yet it looks to us that today’s citizens could benefit from heavier rounds than the AR-15’s .223/5.56, to be compatible with a future that might require long-distance precision instead of CQB when facing robotic systems.

Wouldn’t it be nice to have 6.5 Creedmoor as a NATO standard for AR rifles, to replace the AR-15 and complement the AR-10 with a slightly more precision-oriented AR system, perhaps one with a 22” or 24” barrel in 6.5 Creedmoor? Would this work in war or in citizen defence for sniping purposes?

For civilians, in the age of AI and robotics, the capability to penetrate robust materials might become crucial, particularly for neutralizing hostile drones or terrorist humanoid robots, which could be 3D printed and deployed against unsuspecting citizens.

Sniper rifles, known for their powerful calibres, sound like they would be ideal for such a task and would give humans a fairer chance to defend against AI/robots, which are probably pretty deadly close up.

Not really sure, but when you are not sure, a sniper rifle sounds like a good start.”

@Devgymsetvfx “Yes China just forced OpenAI’s hand we’ll need a much better model than GPT 4 otherwise China will eat all the west.”

@skillsgaptrainer “Agreed. Western nations have encountered strategic challenges at corporate, political, and military levels over the past decades. A critical concern lies in the graduation rates in STEM fields. For example, computer science degrees represent about 3% of all degrees awarded in the United States, highlighting a potential gap in technological readiness. Within the broader field of engineering, individual disciplines often see graduation rates in the single digits. Specifically, specialized fields like aerospace and nuclear engineering report very low numbers of graduates, sometimes as low as 0.5% of all engineering degrees, emphasizing the niche focus of these programs.

Comparatively, China produces a substantial number of engineering graduates annually—several times the output of the United States—while Russia also maintains a strong engineering education system, though on a smaller scale than China. This marked difference in graduate volume suggests a potential shift in global technological prowess and has sparked discussions on the strategic necessity of deploying advanced technologies, such as AI, to augment the capabilities of the existing workforce in the West. The hypothetical deployment of a powerful AI tool like GPT-5 could theoretically enhance the skill sets of non-engineers to meet urgent technological and economic needs.

However, any release of such potent AI technologies must be carefully managed, with thorough vetting to avoid unintended consequences. Enhancing the scientific and technical capabilities of degree holders and tech enthusiasts in the USA, Canada, and Europe is crucial and must be approached with ambition and caution.

For Western nations, effectively engaging with and leading in 21st-century technological innovations is critical. The risk of falling behind is significant, given the increasing global demands for advanced STEM capabilities. Therefore, boosting the number of STEM graduates, particularly in underrepresented engineering disciplines, software engineering, computer science, aerospace engineering, nuclear engineering, physics, etc., and ensuring broader workforce proficiency in technology implementation and applied project development are essential strategies to maintain global competitiveness, relevance, and access to the future global economy. This strategic focus is vital not only to catch up with but potentially to surpass global competitors in technological innovation, and to maintain the USA’s standing, to some degree, as one of the scientific centres of the world.”

@AssaadRazzouk “China graduates 4m science, tech, engineering and maths students each year. The US graduates 800,000. Chinese green tech innovation on steroids is a feature, not a bug. Much more on the breakneck BESS revolution on Ep 83 of the Angry Clean Energy Podcast”

@skillsgaptrainer @AssaadRazzouk “It appears that both Canada and the US have faced challenges in graduating an adequate number of engineers and computer scientists. However, AI might provide a partial solution to this issue.

It is crucial for regulators not to hinder access to the real scientific, technological, engineering, and mathematical capabilities that AI can offer, assuming it adheres to established STEM principles.

By empowering a sufficiently large and motivated group with access to AI’s real STEM potential, Western countries could maintain their status as significant global centers of scientific innovation, even amidst growing competition from rapidly advancing scientific sectors, such as those in China.

This strategy could be vital for sustaining global competitiveness in the 21st century. A mistake was made once in not competing on the “quantity of engineers” produced in the 21st century “age of implementation” that requires many engineers.

This is a second chance. Regulators… do not hide real STEM capabilities from those in society who desire to add real STEM product innovation to Canada and the USA.”

@ztisdale “Remember when they said Digital ID and 15-minute Neighborhoods were a conspiracy theory and then a town in Canada now requires a QR Code to leave?”

@skillsgaptrainer @ztisdale “SGT’s prediction, made years ahead of its time, anticipated a concerning trend among city planners towards creating “prison cities.” This foresight revealed a potential future where urban environments are heavily controlled through technologies such as blockchain and QR codes, effectively gating populations into monitored, confined spaces. This scenario underscores the crucial need for citizen involvement in city planning processes, not just as participants but as leaders shaping the future of their communities.

By advocating for a lifestyle that maintains a 50/50 balance between urban and rural living, we champion a model that fosters resilience, sustainability, and a higher quality of life. This balance ensures that civilization can thrive without the overbearing control that could accompany urban centralization. Such an approach not only mitigates the risks associated with dense, surveilled urban centers but also promotes the development of vibrant, self-sustaining rural areas.

Encouraging citizens to lead, rather than follow, prevents the realization of the dystopian future predicted by SGT. It promotes a civilization where technology serves to enhance, not restrict, personal freedoms and community growth. This proactive stance is essential to developing a society that values equity, sustainability, resilience in emergencies and war, and the well-being of all its members.”

@skillsgaptrainer @ztisdale “It might not just be “firearm rights” that citizens need to protect to keep the “lockdown designers” at bay. Perhaps citizens also need to consider securing property on the city outskirts or further into rural areas. Vote with action!

These “lockdown city prison designers” might attempt to hinder “freedom of movement,” segmenting countries into “districts,” with “digital gatekeeping.”

It is crucial for society that individuals establish homes outside urban centers. Doing so not only reinforces societal capacities for freedom and the defense of sovereignty but also upholds values like property ownership.

This strategy could serve as a bulwark against overreach, promoting a more balanced, autonomous lifestyle that safeguards individual liberties and community values.

Additionally, living in rural areas allows families to grow in environments less constrained by government policies or by AI and robotics technology.

Moreover, a country’s resilience to crises, such as grid-down power outages, famine caused by supply chain failures or by a decline in arable land, or wartime scenarios, is enhanced by the sustainability and self-sufficiency that rural homes can provide. This contributes to Canadian prosperity and boosts the life-sustaining capabilities of Canada from a barely functional standard to a superstandard.”

@skillsgaptrainer @MarioNawfal “The sports car symbolizes independence, autonomy, sovereignty, freedom, ownership, class, wealth, capitalism, adventure, expedition, love, family, survival, and many other things. Perhaps the animator had these unconscious realizations when modeling and creating this video.

Neuralink symbolizes the exact opposite of this.

Perhaps that is why leaders plan to reduce car use and increase network-based chip implants in human brains. Ask your IT company for wearable computing instead of invasive computing. Let’s compromise, people. Let’s keep humanity in balance with AI, and not as a creative idea-generating processor for AI.”

@SmokeAwayyy @skillsgaptrainer “China is working to accelerate Tesla AI while the US regulators and media are working to slow Tesla down. Elon is meeting with Chinese heads of state while US heads of state snub him from the AI Safety Board. Makes you think.”

@skillsgaptrainer “Hopefully, American leaders will support innovators like Elon Musk. We need his vision for a freedom-oriented communication system like X / Starlink here, not elsewhere. Let’s keep groundbreaking tech talent in the U.S.!” #Innovation #TechLeadership

@tsarnick “Ray Kurzweil (in 2009) explains how nanobots in our brains will allow us to experience full-immersion virtual reality in the 2030s”

@skillsgaptrainer @tsarnick “We value the technological advances we’ve embraced so far. However, the finite nature of time means we cannot explore every technological possibility. Just because something is technically feasible doesn’t mean it is the most intelligent or prudent choice for our time or the best direction for our progress.”

@bindureddy “OpenAI says to prepare for GPT-5 and assume it will be way smarter than GPT-4. Great! I am going to go ahead and assume it will do my job! In preparation, should I start looking for tickets to Hawaii! I hear GPT-5 is imminent”

@Devgymsetvfx @bindureddy “4th Industrial Revolution. Yes China just forced OpenAI’s hand we’ll need a much better model than GPT 4 otherwise China will eat all the west.”

Related Books:

“Superintelligence: Paths, Dangers, Strategies” by Nick Bostrom – Bostrom explores the future possibilities of artificial intelligence development and its implications for humanity, focusing on the strategic challenges and potential existential risks posed by AI surpassing human intelligence.

“The Future of Humanity: Terraforming Mars, Interstellar Travel, Immortality, and Our Destiny Beyond Earth” by Michio Kaku – Kaku delves into the future of human space exploration, discussing how advances in robotics, nanotechnology, and biotechnology could allow us to alter our bodies and the cosmos to prevent extinction.

“Life 3.0: Being Human in the Age of Artificial Intelligence” by Max Tegmark – This book examines how artificial intelligence will impact jobs, warfare, society, and the very meaning of being human, emphasizing the need for proactive governance of AI.

“The Pentagon’s Brain: An Uncensored History of DARPA, America’s Top-Secret Military Research Agency” by Annie Jacobsen – Jacobsen provides an in-depth look at the Defense Advanced Research Projects Agency (DARPA), revealing its influence on a wide array of technologies, including those related to AI and national security.

“AI Superpowers: China, Silicon Valley, and the New World Order” by Kai-Fu Lee – Lee discusses the rise of AI, focusing on the competition between the U.S. and China. The book offers insights into how AI is reshaping global power dynamics and the implications for AI governance.

“The Industries of the Future” by Alec Ross – This book explores the next stage of globalization, including the rise of robotics and AI. Ross offers insights into how these technologies will transform economies, jobs, and society at large.

“The Wizards of Armageddon” by Fred Kaplan – Kaplan’s book traces the development of U.S. nuclear strategy and the Cold War’s strategic thinkers, providing context for discussions on the integration of advanced technologies into defense planning.

“Hooked: How to Build Habit-Forming Products” by Nir Eyal – While not directly related to AI governance or space technology, Eyal’s insights into product design and user habit formation can be crucial for understanding how tech companies could drive and shape user behaviour through AI.

“The Space Barons: Elon Musk, Jeff Bezos, and the Quest to Colonize the Cosmos” by Christian Davenport – This book provides a narrative of the private space industry, focusing on the personal rivalry and cooperation between major figures like Elon Musk and Jeff Bezos as they push the boundaries of private space travel.

“The Fourth Industrial Revolution” by Klaus Schwab – Schwab discusses the ongoing transformations caused by new technologies like AI and robotics, outlining the potential impacts on global economies, business practices, and societies.
